A new year, new PolicyViz Newsletter (Issue #29)

Happy New Year, everyone! I hope you had a lovely holiday season and took some time to rest and relax.

As you may have noticed, I have moved the newsletter from Revue, which is shutting down, over to Substack. I hope this format will be just as useful as the Revue newsletter, if not more so. But you’ll get the same stuff every other week: draft blog posts; notices for new and upcoming podcasts; lists of things I’m reading and watching; and special sneak peeks at videos, conferences, and more.

This week, I have a lengthy blog post for your review. There has been a lot of discussion about critique in the data visualization field lately, which has spurred some thinking on my part about how I (and we, as a community) can do better at critiquing each other’s work. I hope this post will be useful and give you some things to think about. If you have thoughts on the post and how I might improve it before I publish it on the PolicyViz website, please let me know. You can email me at jon@policyviz.com, use the contact form on my website, or DM me on Twitter.

Today’s podcast features Vidya Setlur and Bridget Cogley, authors of the really great new book, Functional Aesthetics for Data Visualization. On the next episode, you’ll hear from Stefanie Posavec and Sonja Kuijpers about their work on the new book from Greta Thunberg.

Be sure to check out the rest of the newsletter as well! Lots of good stuff after the post.

Take care and thanks,

Jon

Draft blog post: A Better Path Toward Criticizing Data Visualization

Broadly, data visualization critique allows practitioners to further the field, explore new approaches and new dimensions, and understand what works and what doesn’t for different audiences, platforms, and content areas. But the current approach to data visualization critique doesn’t achieve these lofty aims; instead, it often stoops to derision or defers to rigidity, which does little to move the field forward. Generally, these critiques fall into two large camps (with some overlap). One camp criticizes what I think most of us would consider “obviously” bad visuals: bad colors, unnecessary elements and icons, too many lines or labels, and, especially, graphs that mislead or misrepresent. I’ll call this the GraphCrimes camp.

In many ways, the work of a critic is easy. We risk very little, yet enjoy a position over those who offer up their work and their selves to our judgment. We thrive on negative criticism, which is fun to write and to read. But the bitter truth we critics must face is that in the grand scheme of things, the average piece of junk is probably more meaningful than our criticism designating it so. -Anton Ego, Ratatouille

The other camp tends to criticize graphs that don’t follow existing norms, including bespoke visuals that break away from perceptually precise encodings (see, e.g., Bertini, Correll, and Franconeri 2020) to create more beautiful or more engaging graphics. I’ll call this the Xenographics camp.

Each camp has problems. GraphCrimes critiques often feel like “hit-and-runs”: post a graph that goes against our personal aesthetic, make some mocking comment, and move on. This kind of critique fails to acknowledge the constraints the designer might have faced, such as the tools they had to use or the bosses they had to please.

At other times, the GraphCrimes camp surfaces genuinely misleading graphs, which I think are worthy of criticism and, especially where we believe the deception is intentional, derision. Perhaps this is the best place for us to focus our criticism: helping the world become better educated by highlighting graphs that mislead and misinform.

There is an additional nuance to the GraphCrimes camp. Critiquing graphs that could be clearer or more effective has its own value, especially for those new to the field and building their own skills. Such critiques don’t need to be (and shouldn’t be) hit-and-runs; they can mix the good with the bad, noting possible improvements while acknowledging the challenges the original designer may have faced. I posted this thread in December about the UK Performance Tracker, which I think is a decent example of how to show respect for the original while suggesting some possible tweaks and enhancements. When offered in a spirit of positive feedback or education, these kinds of critiques can be useful.

The Xenographics camp, on the other hand, tends to argue over whether a unique graph, or a unique element within a graph, is somehow “better” than a more standard approach. Although fun and amusing, these arguments tend to cycle around the same old problems: familiarity, accuracy, speed, and aesthetics. Almost always, the answer boils down to “it depends.”

We can think of the Xenographics camp as nibbling around the edges of a delicious, fancy meal: you never get to the best parts, and it doesn’t leave you satisfied. The GraphCrimes camp, meanwhile, is like eating fast food: satisfying for a moment, but you feel bad later.

Speed is not the answer

In both camps, speed is a common basis for critique: “The published graph takes me X seconds; if it were oriented this way or plotted this way, it would take me X minus Y seconds.” But why do we feel we need to “get” a visualization in some minuscule amount of time? I don’t believe for a second (see what I did there? 😉) that speed should be a central measure of a successful graphic. In fact, from the creator’s perspective, it might be the opposite: we want people to stay with the graph longer and explore, dive in, play.

Again, it depends, because audience matters. A business executive who needs to make quick decisions may want the more familiar graph, but a news organization wants to keep eyes on its pages. Elijah Meeks’s 2018 series on this topic, Data Visualization, Fast and Slow (split across four separate posts), is very much worth reading.

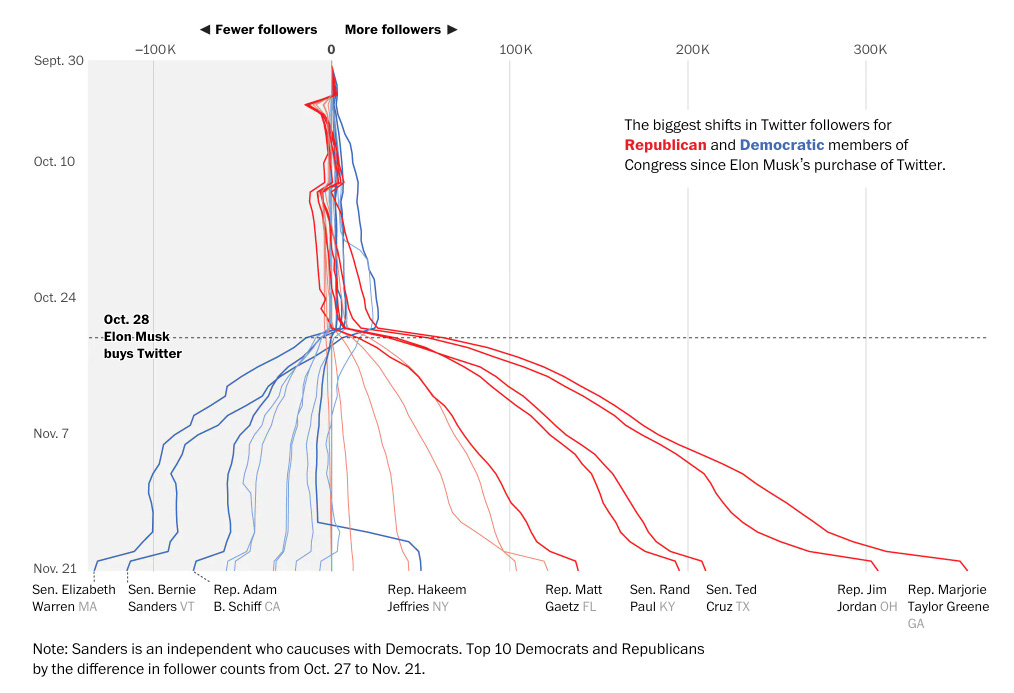

Take the recent example of this vertical line chart from the Washington Post. Here, time runs along the vertical axis, showing changes in Twitter follower counts for Democratic and Republican lawmakers. (Disclosure: one of the authors of that piece is Luis Melgar, a former Urban Institute colleague.) Many data practitioners criticized the graph for its vertical orientation, arguing that it was disorienting and forced readers to take an extra moment (or second, or minute, or whatever the amount of time is) to read and understand it. (Personally, I didn’t find the vertical orientation a stumbling block and really appreciated how the blue and red lines shift left and right, matching each party’s political leaning. It’s also worth noting that the mobile version is horizontally oriented, the opposite of what I would have expected given how much we scroll vertically on our mobile devices.)

But ultimately, who cares? Who cares how long it takes to understand the graph? Even if it does take you more time to understand it, does it really matter? What if the data were especially relevant to your life or your job? You would probably take the extra time to read it anyway, right? On its own, speed doesn’t seem to be the metric we should care about. It’s about understanding. If you see a graph that you don’t understand instantaneously and you don’t take the extra time to learn it, then the graph probably wasn’t that important to you in the first place.

Rules don’t exist

Many in the field argue that there are no “rules” to data visualization. Alberto Cairo put it nicely in a recent blog post when he compared “rules” of data visualization with “rules” of writing:

Beyond a certain and flexible observance of the symbols and grammar of the language we employ, there are really no universal rules for writing that are applicable to all kinds of writing regardless of purpose, context, or audience.

Of course, data visualization does have symbols and a grammar, just like writing. And many have argued that a basic set of rules—possibly better called guidelines or conventions?—is especially useful for people just starting out in the field. But the primary rule should always be ensuring any data visualization can be understood.

To that end, the context and medium in which a visualization is presented is key. Alberto makes an even more important point earlier in his post from last week: “Saying that pie charts are bad regardless of the context in which they are used makes sense only if you are an idealist.” Often, our critiques are thrown off by taking graphs out of context.

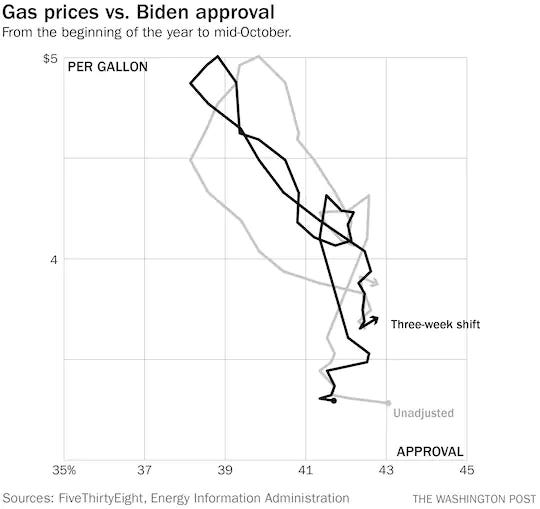

A recent connected scatterplot from Philip Bump at the Washington Post is a great example (you can also listen to or watch my conversation with Philip in this episode of the podcast). The graph split people into two sides. One side said many readers don’t know how to read a connected scatterplot, so the graph should be redesigned. The other side argued that the graph effectively showed how the trends moved together, whereas placing the data on separate graphs would make that comparison more difficult. (I did my own video critique-of-the-critique.)

Alone, the graph would be difficult to read for people new to the format. But in its original context in Philip’s column, he explains to the reader how to read it in the text that precedes the graph. Removing the graph from that context implies that every graph should be able to stand on its own, which ignores the environments in which and reasons why we often create visualizations.

Data visualization does not work that way. Imagine any bar chart you like. Now remove all of the text—remove the labels, the tick marks, the title, all of it. You don’t know what is being plotted in that chart anymore. That’s because the text helps set the context for the graph.

I recently wrote, “We are not born knowing how to read a bar chart or line chart or pie chart.” More pointedly, as Bridget Cogley wrote in a June 2022 blog post:

Charts are more than pictorial representations of data. They are classifier systems that rely on numbers and words to lend them greater meaning. When preserved, they become idioms of the culture and language that created them.

Although asking readers to read the surrounding text makes our graphs and charts less immediately discernible, it can make them more effective.

Where do we go from here?

It goes without saying that many of these critique conversations take place within the data visualization community, among those of us who spend a lot of time thinking about data visualization practices and strategies. I suspect most content creators care less about what data visualization “experts” think of their graphs than about what their regular readers think.

It sometimes feels as if we are screaming into the void—a sentiment many have shared with me as I’ve asked questions about critique. Obviously, a better way forward is to encourage discussion and debate.

Critiquing the extremely, obviously bad visualizations only lets us place our comments in a binary: the graph is either bad for reasons a, b, and c, or it’s good for reasons d, e, and f. But maybe that binary makes the discussion clearer, easier, and more focused on making visualizations more effective.

The Xenographics critiques enable us to examine, refine, discuss, and debate, even if in the end we accomplish little more than getting back to “it depends,” which is where we always seem to end up. It depends on the data. It depends on the platform. It depends on the kind of reader. It depends on what the author wants readers to do with the visualization. It depends.

In 2015, Fernanda Viégas and Martin Wattenberg wrote the seminal blog post on data visualization critique. They argued for more respect and more rigor in the everyday debates. They wanted to see critics redesign more visualizations: “A redesign is—or should be—intellectually honest, since it’s using the same data…redesigns are convincing in a democratic way.”

Of course, there are many ways our redesigns may not reflect the constraints or goals of the original designer, including the tools they used, the time they had, or the managers breathing down their necks. The word redesign itself (which, in my usage at least, has replaced the word “remake”) may need to be replaced with something like “rendition” or “refinement,” both terms that Bridget Cogley and Vidya Setlur use in their recent book, Functional Aesthetics for Data Visualization, and that we talk about briefly on a recent podcast episode.

Regardless of what we call it, redesigns/renditions/refinements help the critic concentrate on the content. Creating a graphic forces us to think about whether we should be showing level or change, individual data points or summary measures, segments or part-to-whole. There is enormous value in such exercises, especially for those new to the field learning their own process and aesthetics, because there’s no “right” way to visualize a dataset. Viégas and Wattenberg said as much eight years ago: “We’ll know that visualization has matured as a medium when we see as much criticism about content as we do today about technique.”

Recommendations for new models of critique

This post may not be particularly enlightening for some readers of this site. Perhaps, for better or worse, you’ve engaged in some of these conversations. Perhaps you’ve been on the critiqued side rather than the critic side. Either way, I wonder how often you’ve felt you gained something meaningful from those conversations.

Moving forward, data practitioners can begin to employ a more formal framework where a data visualization is judged across different heuristics to describe what makes a good graph and a “better experience.” There are at least three such frameworks that you may want to explore in your own work, either as an individual or in your teams or organizations.

Data Experience (DX) Critique Framework from Visa. Published by Lilach Manheim (and others, I’m sure) at Visa in 2022, DX provides a “more structured and consistent methodology for critiquing data visualization design with a human-centered focus. The DX critique framework leverages existing heuristic frameworks and human-centered design techniques, along with principles of cognitive science and accessibility.” This amazing framework divides a project’s design into six pillars: Purpose, Information Architecture, Data Representation, Visual Hierarchy, Interactivity, and Context. Altogether, there are around 80 questions that prompt the user to think critically about the aspects of the visualization; for example, “Are more important text elements formatted for higher visual priority?”

In an email, Lilach explained to me that the six design pillars “really help the person who is asking for feedback to articulate what exactly they want feedback on. I think this is one of the big gaps in how we approach critique—we need to get better at asking for the feedback that would be most useful to us, at whatever point in the design process we are.” The prompts in the DX framework are mostly open-ended rather than binary. It’s not so much about whether a graph is good or bad; it’s more a tool to help you think about how to make it better.

Functional Aesthetics for Data Visualization by Bridget Cogley and Vidya Setlur. Chapter 16 in this new book from Cogley and Setlur is a 15-page checklist that can be used to evaluate a visualization on a binary yes/no scale. (They haven’t yet published a standalone checklist, though I think that would be immensely valuable.) There are 15 sections to this framework that follow the chapters of the book and consist of more than 100 overall prompts. Although I like the open-ended approach in the Visa framework, I can see the binary scoring approach having advantages for simpler graphs and quicker turnaround times. What I also like about the Cogley/Setlur guide (as with the Visa framework) is that the questions do not propose a “right” or “wrong” but simply prompt the rater to think critically.

Cogley and Setlur also created a separate “Maturity Matrix” that “can be used to assess visualizations at a high level if evaluating a large number of visualizations. The matrix can provide insight into an overall view of maturity, such as for organizations evaluating their overall practice.” Again, this matrix consists of prompting statements rather than right or wrong guidance and divides the 15 sections into four main categories: Pictographic (i.e., graph type); Perceptual (i.e., visual emphasis); Semantic (i.e., overall space); and Intentional (i.e., structure and layout). I really appreciate the focus on teams here because so many of us don’t work as individuals, but within larger teams and organizations.

Data Visualization Checklist by Evergreen Data and Data Depict Studio. Originally developed by Stephanie Evergreen and Ann Emery in 2014 (and updated in 2016), this checklist is available as a downloadable PDF and as an interactive website. It “is a compilation of 24 guidelines on how graphs should be formatted to best show the story in your data.” The guidelines are broken down into five sections: Text, Arrangement, Color, Lines, and Overall.

Users are prompted to score the visualization on a 0-to-2-point scale (0 for criteria “not met,” 1 for “partially met,” and 2 for “fully met”). The prompts read more like preferences than objective design decisions or open-ended questions. The first item, for example, says, “6-12 word title is left-justified in upper left corner. (Short titles enable readers to comprehend takeaway messages even when quickly skimming the graph. Rather than a generic phrase, use a descriptive sentence that encapsulates the graphs finding or ‘so what?’ Western cultures start reading in the upper left, so locate the title there.)”

Personally, I’m not sure a 6-to-12-word title located in the upper-left corner is objectively better than some other choice, so how should I score this? I’m also not sold on any quantitative scale, especially when all of the criteria are weighted equally (data accuracy would seem to matter more than a 6-to-12-word title). There are two evaluation reports that used the checklist: one by Sena (Pierce) Sanjines that isn’t linked on the site and that I can’t find anywhere, and another by Evergreen, Lyons, and Rollison that used the checklist to evaluate 88 reports.
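To make the weighting point concrete, here is a small, hypothetical Python sketch. It is not part of the Evergreen/Emery checklist: the four item names and the weight of 5 on data accuracy are made-up assumptions for illustration. It simply shows that, under equal weights, a graph with a clumsy title and a graph with inaccurate data can earn identical scores.

```python
# Hypothetical illustration only: item names and weights are assumptions,
# not taken from the actual Data Visualization Checklist.
RATING_POINTS = {"not met": 0, "partially met": 1, "fully met": 2}


def checklist_percent(ratings, weights=None):
    """Score a graph as a percentage of the maximum possible points.

    ratings maps each checklist item to "not met", "partially met", or "fully met".
    weights optionally maps each item to a multiplier; by default every item counts equally.
    """
    if weights is None:
        weights = {item: 1 for item in ratings}
    earned = sum(weights[item] * RATING_POINTS[r] for item, r in ratings.items())
    possible = sum(weights[item] * 2 for item in ratings)  # 2 points = "fully met"
    return 100 * earned / possible


# Graph A misses only the title guideline; Graph B misrepresents the data.
graph_a = {"title": "not met", "labels": "fully met", "color": "fully met", "data_accuracy": "fully met"}
graph_b = {"title": "fully met", "labels": "fully met", "color": "fully met", "data_accuracy": "not met"}

print(checklist_percent(graph_a), checklist_percent(graph_b))  # 75.0 75.0

# A hypothetical weighting that treats accuracy as five times more important.
accuracy_first = {"title": 1, "labels": 1, "color": 1, "data_accuracy": 5}
print(checklist_percent(graph_a, accuracy_first))  # 87.5
print(checklist_percent(graph_b, accuracy_first))  # 37.5
```

Weighting doesn’t resolve my deeper worry about reducing critique to a number, but it at least keeps a misleading graph from scoring as well as one with an imperfect title.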

Creating better, more effective visualization critiques

We need to move critique in the data visualization field forward. Let’s create a new camp, one that focuses on calling out, criticizing, and correcting visualizations that mislead, misrepresent, and misinform. Let’s provide reasonable alternatives to those graphs, exploring the data presented to explain why the representation is misleading or the underlying data are incorrect.

With this new mission, the three tenets of the Viégas/Wattenberg model for data visualization critique still hold, but I’ll add one more:

Maintain rigor. When critiquing a graph, we need to explain why we think it needs improvement, then offer a solution. We need to keep in mind the context of the original: even if it doesn’t work for us or for our specific audience, it may work for the original designer.

Respect the designer. Let’s be more respectful of the original design and designer. Offer possible solutions while recognizing that we might be suggesting preferences rather than an objective better way.

Respect the critic. Not every redesign/rendition/refinement needs to be perfect. A quick sketch might do; if the underlying motive is to help rather than disparage, a better solution can emerge.

Call out misinformation. Here is what I’m adding to Viégas/Wattenberg, and it reflects the political and societal changes over the last few years. We—as a field populated by experts in data, visualization, and science—need to be explicit and strong about calling out misinformation. We need to fight against work that intends to mislead and, ultimately, to do harm.

Creating a better, more collegial environment to critique data visualization can allow us to teach others and inform our users and stakeholders. We can move beyond screaming into the void about how one graph is objectively worse than another simply because we aren’t as familiar with it or it isn’t as clear as we want it to be. Let’s focus our attention on helping expand data visualization skills and curbing the spread of misleading data and graphs.

Special thanks to Alberto Cairo, Bridget Cogley, Ben Jones, Lilach Manheim, Arvind Satyanarayan, and Alan Smith for their comments and suggestions on earlier drafts of this post. All errors are, of course, my own.

PolicyViz Podcast with Vidya Setlur and Bridget Cogley

Check out this week’s episode of the PolicyViz Podcast! I chat with Vidya Setlur and Bridget Cogley, authors of the great new book, Functional Aesthetics for Data Visualization. We talk about the book, their process, shortcuts, and so much more. One of the longer episodes of the podcast in a while!

Things I’m Reading and Watching

Articles

Do No Harm Guide: Centering Accessibility in Data Visualization by Schwabish, Popkin, and Feng

Why Shouldn’t All Charts Be Scatter Plots? Beyond Precision-Driven Visualizations by Bertini, Correll, and Franconeri

Surfacing Visualization Mirages by McNutt, Kindlmann, and Correll

Give Text A Chance: Advocating for Equal Consideration for Language and Visualization by Stokes and Hearst

Striking a Balance: Reader Takeaways and Preferences when Integrating Text and Charts by Stokes et al.

TV, Movies, and Miscellaneous

Fleishman Is in Trouble on Hulu

Physical on Apple TV

Glass Onion: A Knives Out Mystery on Netflix

A Man Called Otto in theaters

The Fabelmans in theaters

Note: As an Amazon Associate I earn from qualifying purchases.

Big Deals at the PolicyViz Shop

I’ve cut prices on many items at the PolicyViz shop, including the Match It! data visualization card game, the Graphic Continuum data visualization library sheet, and my Excel e-books. I’ve also updated the data visualization catalog, which now has more than 1,600 graphs, charts, and diagrams and which you can download for free.